Quick Install

Download the package from the official Apache Solr website, then uncompress it and point the environment at the JDK:

JAVA_HOME=/opt/jdk1.8.0_211/

PATH=/usr/local/bin:/usr/bin:/bin:/usr/local/games:/usr/games:/opt/jdk1.8.0_211/bin

export JAVA_HOME

export PATH
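The exports above only last for the current shell session. Below is a minimal sketch of the same setup. It assumes the JDK really lives in /opt/jdk1.8.0_211. Instead of retyping the whole PATH, it puts the JDK's bin directory in front of the existing one, then checks that a java binary is actually there:

```shell
#!/usr/bin/env bash
# Assumption: the JDK was unpacked to /opt/jdk1.8.0_211; adjust to your own path.
export JAVA_HOME=/opt/jdk1.8.0_211
# The java launcher lives in $JAVA_HOME/bin, so that directory (not the JDK root) goes on PATH.
export PATH="$JAVA_HOME/bin:$PATH"

# Sanity check: show the Java version that will be used, or warn if the JDK is missing.
if [ -x "$JAVA_HOME/bin/java" ]; then
  "$JAVA_HOME/bin/java" -version
else
  echo "warning: no java executable found in $JAVA_HOME/bin" >&2
fi
```

To make the setting permanent, the two export lines can go into ~/.bashrc or ~/.profile.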

ZooKeeper

ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. All of these kinds of services are used in some form or another by distributed applications. Each time they are implemented there is a lot of work that goes into fixing the bugs and race conditions that are inevitable. Because of the difficulty of implementing these kinds of services, applications initially usually skimp on them, which makes them brittle in the presence of change and difficult to manage. Even when done correctly, different implementations of these services lead to management complexity when the applications are deployed.

Usage: solr COMMAND OPTIONS

where COMMAND is one of: start, stop, restart, status, healthcheck, create, create_core, create_collection, delete, version, zk, auth, assert, config, autoscaling

Standalone server example (start Solr running in the background on port 8984):

./solr start -p 8984

SolrCloud example (start Solr running in SolrCloud mode using localhost:2181 to connect to Zookeeper, with 1g max heap size and remote Java debug options enabled):

./solr start -c -m 1g -z localhost:2181 -a "-Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=1044"

Omit ‘-z localhost:2181’ from the above command if you have defined ZK_HOST in solr.in.sh.
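Here is a sketch of the matching lines in solr.in.sh, the shell include file that bin/solr reads at startup. ZK_HOST is the variable named above. SOLR_HEAP is the include file's counterpart of -m. The values are taken from the example command, so treat them as assumptions to adapt:

```shell
# Excerpt for bin/solr.in.sh: plain shell variable assignments read by bin/solr.
# Same effect as passing "-z localhost:2181" on every start command:
ZK_HOST="localhost:2181"
# Same effect as passing "-m 1g" (sets both -Xms and -Xmx):
SOLR_HEAP="1g"
```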

Help on the start command

user@debian:~/Downloads/solr-8.1.1/bin$ ./solr start -help

Usage: solr start [-f] [-c] [-h hostname] [-p port] [-d directory] [-z zkHost] [-m memory] [-e example] [-s solr.solr.home] [-t solr.data.home] [-a "additional-options"] [-V]

-f Start Solr in foreground; default starts Solr in the background and sends stdout / stderr to solr-PORT-console.log

-c or -cloud Start Solr in SolrCloud mode; if -z not supplied and ZK_HOST not defined in solr.in.sh, an embedded ZooKeeper instance is started on Solr port+1000, such as 9983 if Solr is bound to 8983

-h Specify the hostname for this Solr instance

-p Specify the port to start the Solr HTTP listener on; default is 8983. The specified port (SOLR_PORT) will also be used to determine the stop port STOP_PORT=($SOLR_PORT-1000) and JMX RMI listen port RMI_PORT=($SOLR_PORT+10000). For instance, if you set -p 8985, then the STOP_PORT=7985 and RMI_PORT=18985
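The derived ports are plain arithmetic on the HTTP port. This matters when several instances share one host, because their derived ports must not collide. Below is a small shell sketch of the rules from the -p and -c descriptions. Port 8985 is just the example value:

```shell
#!/usr/bin/env bash
# Derive the auxiliary ports from the HTTP port, following the help text.
SOLR_PORT=8985
STOP_PORT=$((SOLR_PORT - 1000))    # Jetty stop port
RMI_PORT=$((SOLR_PORT + 10000))    # JMX RMI listen port
ZK_PORT=$((SOLR_PORT + 1000))      # embedded ZooKeeper (only with -c and no -z / ZK_HOST)
echo "STOP_PORT=$STOP_PORT RMI_PORT=$RMI_PORT embedded ZK=$ZK_PORT"
# prints: STOP_PORT=7985 RMI_PORT=18985 embedded ZK=9985
```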

-d Specify the Solr server directory; defaults to server

-z Zookeeper connection string; only used when running in SolrCloud mode using -c. If neither ZK_HOST is defined in solr.in.sh nor the -z parameter is specified, an embedded ZooKeeper instance will be launched.

-m Sets the min (-Xms) and max (-Xmx) heap size for the JVM, such as: -m 4g results in: -Xms4g -Xmx4g; by default, this script sets the heap size to 512m
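-m gives the minimum and maximum heap the same value, so the JVM starts with its full heap and never has to grow it. Below is a hypothetical helper that mirrors that expansion, including the 512m default. It is not part of bin/solr:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of bin/solr): mirrors how -m is expanded,
# using the same value for both the minimum and the maximum heap size.
heap_opts() {
  local size="${1:-512m}"   # bin/solr's default when -m is not given
  echo "-Xms${size} -Xmx${size}"
}

heap_opts 4g   # -Xms4g -Xmx4g
heap_opts      # -Xms512m -Xmx512m
```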

-s Sets the solr.solr.home system property; Solr will create core directories under this directory. This allows you to run multiple Solr instances on the same host while reusing the same server directory set using the -d parameter. If set, the specified directory should contain a solr.xml file, unless solr.xml exists in Zookeeper. This parameter is ignored when running examples (-e), as the solr.solr.home depends on which example is run. The default value is server/solr. If passed relative dir, validation with current dir will be done, before trying default server/

-t Sets the solr.data.home system property, where Solr will store index data in /data subdirectories. If not set, Solr uses solr.solr.home for config and data.
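The -s option is what lets two instances share one server directory. Below is a sketch that prepares one Solr home per port and copies the stock solr.xml into each. It assumes Solr 8.1.1 was unpacked in ~/Downloads; set SOLR_INSTALL to override that. The homes go into a throwaway temp directory, and the script only prints the start commands:

```shell
#!/usr/bin/env bash
# Assumption: Solr 8.1.1 unpacked in ~/Downloads; override SOLR_INSTALL otherwise.
SOLR_INSTALL="${SOLR_INSTALL:-$HOME/Downloads/solr-8.1.1}"
HOMES_BASE="$(mktemp -d)"   # throwaway location for this example

for port in 8983 8984; do
  home="$HOMES_BASE/node-$port"
  mkdir -p "$home"
  # Each -s directory needs a solr.xml (unless solr.xml is kept in ZooKeeper);
  # the stock one ships in server/solr/.
  if [ -f "$SOLR_INSTALL/server/solr/solr.xml" ]; then
    cp "$SOLR_INSTALL/server/solr/solr.xml" "$home/"
  fi
  # Print rather than run the start command, so the sketch is safe to try.
  echo "$SOLR_INSTALL/bin/solr start -p $port -s $home"
done
```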

-e Name of the example to run; available examples:

cloud: SolrCloud example

techproducts: Comprehensive example illustrating many of Solr’s core capabilities

dih: Data Import Handler

schemaless: Schema-less example

-a Additional parameters to pass to the JVM when starting Solr, such as to setup Java debug options. For example, to enable a Java debugger to attach to the Solr JVM you could pass: -a "-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=18983"

In most cases, you should wrap the additional parameters in double quotes.

-j Additional parameters to pass to Jetty when starting Solr. For example, to add configuration folder that jetty should read you could pass: -j "--include-jetty-dir=/etc/jetty/custom/server/"

In most cases, you should wrap the additional parameters in double quotes.

-noprompt Don’t prompt for input; accept all defaults when running examples that accept user input

-v and -q Verbose (-v) or quiet (-q) logging. Sets default log level to DEBUG or WARN instead of INFO

-V/-verbose Verbose messages from this script

Help on the stop command

user@debian:~/Downloads/solr-8.1.1/bin$ ./solr stop -help

Usage: solr stop [-k key] [-p port] [-V]

-k Stop key; default is solrrocks

-p Specify the port the Solr HTTP listener is bound to

-all Find and stop all running Solr servers on this host

-V/-verbose Verbose messages from this script

NOTE: To see if any Solr servers are running, do: solr status

Start SolrCloud in example mode

./solr start -e cloud

Health check of a SolrCloud collection

user@debian:~/Downloads/solr-8.1.1/bin$ ./solr healthcheck -c rafaels_cloud -V

INFO - 2019-07-15 11:50:33.440; org.apache.solr.common.cloud.ConnectionManager; zkClient has connected
INFO - 2019-07-15 11:50:33.467; org.apache.solr.common.cloud.ZkStateReader; Updated live nodes from ZooKeeper... (0) -> (2)
INFO - 2019-07-15 11:50:33.504; org.apache.solr.client.solrj.impl.ZkClientClusterStateProvider; Cluster at localhost:9983 ready

{
  "collection":"rafaels_cloud",
  "status":"healthy",
  "numDocs":0,
  "numShards":2,
  "shards":[
    {
      "shard":"shard1",
      "status":"healthy",
      "replicas":[
        {
          "name":"core_node3",
          "url":"http://127.0.1.1:8983/solr/rafaels_cloud_shard1_replica_n1/",
          "numDocs":0,
          "status":"active",
          "uptime":"0 days, 0 hours, 12 minutes, 26 seconds",
          "memory":"267.5 MB (%52.2) of 512 MB",
          "leader":true},
        {
          "name":"core_node6",
          "url":"http://127.0.1.1:7574/solr/rafaels_cloud_shard1_replica_n2/",
          "numDocs":0,
          "status":"active",
          "uptime":"0 days, 0 hours, 12 minutes, 15 seconds",
          "memory":"172.4 MB (%33.7) of 512 MB"}]},
    {
      "shard":"shard2",
      "status":"healthy",
      "replicas":[
        {
          "name":"core_node7",
          "url":"http://127.0.1.1:8983/solr/rafaels_cloud_shard2_replica_n4/",
          "numDocs":0,
          "status":"active",
          "uptime":"0 days, 0 hours, 12 minutes, 26 seconds",
          "memory":"267.9 MB (%52.3) of 512 MB",
          "leader":true},
        {
          "name":"core_node8",
          "url":"http://127.0.1.1:7574/solr/rafaels_cloud_shard2_replica_n5/",
          "numDocs":0,
          "status":"active",
          "uptime":"0 days, 0 hours, 12 minutes, 15 seconds",
          "memory":"173.2 MB (%33.8) of 512 MB"}]}]}

Starting Cloud

./solr start -cloud -p 8983 -s "/home/user/Downloads/solr-8.1.1/example/cloud/node1/solr"

./solr start -cloud -p 7574 -s "/home/user/Downloads/solr-8.1.1/example/cloud/node2/solr" -z localhost:9983
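Once both nodes are back up, the healthcheck command from the previous section is a quick way to confirm that every replica rejoined. Below is a grep-based sketch that counts "active" replicas in that report. The sample string is trimmed from the rafaels_cloud output shown earlier. A real JSON tool such as jq would be more robust:

```shell
#!/usr/bin/env bash
# Count replicas reported as "active" in `solr healthcheck` output read from stdin.
count_active_replicas() {
  grep -o '"status":"active"' | wc -l | tr -d ' '
}

# Trimmed sample in the same shape as the rafaels_cloud report (2 shards, 2 replicas each).
sample='{"collection":"rafaels_cloud","status":"healthy","shards":[
{"shard":"shard1","status":"healthy","replicas":[{"name":"core_node3","status":"active"},{"name":"core_node6","status":"active"}]},
{"shard":"shard2","status":"healthy","replicas":[{"name":"core_node7","status":"active"},{"name":"core_node8","status":"active"}]}]}'

active=$(printf '%s\n' "$sample" | count_active_replicas)
echo "active replicas: $active"   # active replicas: 4

# Against a live cluster (from the bin directory):
#   ./solr healthcheck -c rafaels_cloud | count_active_replicas
```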

Delete a collection

user@debian:~/Downloads/solr-8.1.1/bin$ ./solr delete -c rafaels_cloud

{
  "responseHeader":{
    "status":0,
    "QTime":272},
  "success":{
    "127.0.1.1:8983_solr":{"responseHeader":{
        "status":0,
        "QTime":55}},
    "127.0.1.1:7574_solr":{"responseHeader":{
        "status":0,
        "QTime":78}}}}

Deleted collection 'rafaels_cloud' using command:

http://127.0.1.1:7574/solr/admin/collections?action=DELETE&name=rafaels_cloud

user@debian:~/Downloads/solr-8.1.1/bin$
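The delete command wraps the Collections API URL it prints. Below is a sketch of calling that API directly with curl. It assumes a node on localhost:8983, since any live node accepts Collections API requests. By default it only prints the command; set DRY_RUN=0 to send it:

```shell
#!/usr/bin/env bash
# Assumption: a SolrCloud node listening on localhost:8983.
SOLR_URL="${SOLR_URL:-http://localhost:8983/solr}"
COLLECTION="rafaels_cloud"
delete_url="$SOLR_URL/admin/collections?action=DELETE&name=$COLLECTION"

if [ "${DRY_RUN:-1}" = 1 ]; then
  echo "curl \"$delete_url\""   # print only
else
  curl -s "$delete_url"
fi
```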

Start the SolrCloud example with all defaults (no prompts)

./solr -e cloud -noprompt

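Restarting nodes

Run these from the Solr install directory (e.g. ~/Downloads/solr-8.1.1), since the -s paths are relative to it. To restart node1 running on port 8983:

bin/solr restart -c -p 8983 -s example/cloud/node1/solr

To restart node2 running on port 7574, you can do:

bin/solr restart -c -p 7574 -z localhost:9983 -s example/cloud/node2/solr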

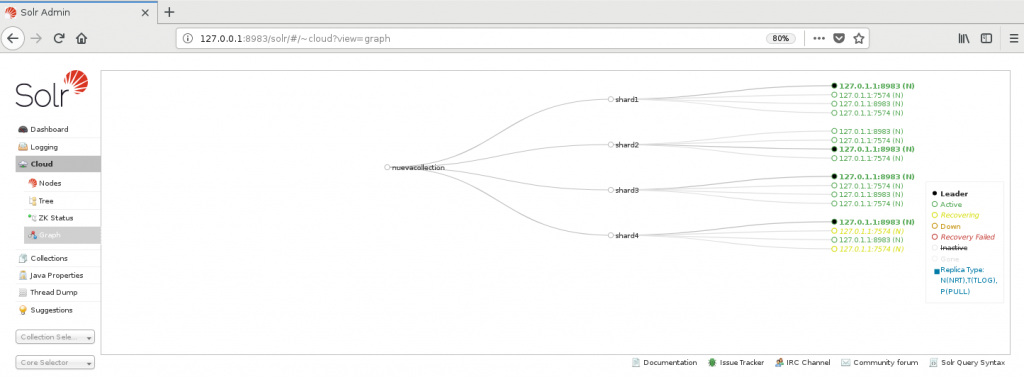

Types of Replicas

By default, all replicas are eligible to become leaders if their leader goes down. However, this comes at a cost: if all replicas could become a leader at any time, every replica must be in sync with its leader at all times. New documents added to the leader must be routed to the replicas, and each replica must do a commit. If a replica goes down, or is temporarily unavailable, and then rejoins the cluster, recovery may be slow if it has missed a large number of updates.

These issues are not a problem for most users. However, some use cases would perform better if the replicas behaved a bit more like the older master/slave replication model, either by not syncing in real time or by not being eligible to become leaders at all.

Solr accomplishes this by allowing you to set the replica type when creating a new collection or when adding a replica. The available types are:

NRT: This is the default. An NRT replica (NRT = NearRealTime) maintains a transaction log and writes new documents to its indexes locally. Any replica of this type is eligible to become a leader. Traditionally, this was the only type supported by Solr.

TLOG: This type of replica maintains a transaction log but does not index document changes locally. This type helps speed up indexing since no commits need to occur in the replicas. When this type of replica needs to update its index, it does so by replicating the index from the leader. This type of replica is also eligible to become a shard leader; it would do so by first processing its transaction log. If it does become a leader, it will behave the same as if it were an NRT replica.

PULL: This type of replica neither maintains a transaction log nor indexes document changes locally. It only replicates the index from the shard leader. It is not eligible to become a shard leader and doesn't participate in shard leader election at all.

If you do not specify the type of replica when it is created, it will be NRT type.
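Replica types are chosen through Collections API parameters. CREATE accepts per-shard counts in nrtReplicas, tlogReplicas and pullReplicas, and ADDREPLICA accepts type. Below is a sketch that builds the TLOG + PULL combination. It assumes a node on localhost:8983, and mixed_cloud is a hypothetical collection name. It prints the requests unless DRY_RUN=0:

```shell
#!/usr/bin/env bash
# Assumptions: SolrCloud node on localhost:8983; "mixed_cloud" is a hypothetical name.
SOLR_URL="${SOLR_URL:-http://localhost:8983/solr}"

# New collection: 2 shards, each with 1 TLOG replica (leader-eligible) and 1 PULL replica.
# maxShardsPerNode=2 is included on the assumption that Solr 8.x defaults it to 1,
# which would not let 4 replicas fit on a two-node cluster.
create_url="$SOLR_URL/admin/collections?action=CREATE&name=mixed_cloud&numShards=2&tlogReplicas=1&pullReplicas=1&maxShardsPerNode=2"

# Existing collection: add one more PULL replica to shard1.
add_url="$SOLR_URL/admin/collections?action=ADDREPLICA&collection=mixed_cloud&shard=shard1&type=pull"

for url in "$create_url" "$add_url"; do
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "curl \"$url\""   # print only
  else
    curl -s "$url"
  fi
done
```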

Combining Replica Types in a Cluster

There are three combinations of replica types that are recommended:

All NRT replicas

All TLOG replicas

TLOG replicas with PULL replicas

ZooKeeper tool examples

/home/user/Downloads/solr-8.1.1/server/scripts/cloud-scripts/zkcli.sh

Examples:

zkcli.sh -zkhost localhost:9983 -cmd bootstrap -solrhome /opt/solr

zkcli.sh -zkhost localhost:9983 -cmd upconfig -confdir /opt/solr/collection1/conf -confname myconf

zkcli.sh -zkhost localhost:9983 -cmd downconfig -confdir /opt/solr/collection1/conf -confname myconf

zkcli.sh -zkhost localhost:9983 -cmd linkconfig -collection collection1 -confname myconf

zkcli.sh -zkhost localhost:9983 -cmd makepath /apache/solr

zkcli.sh -zkhost localhost:9983 -cmd put /solr.conf 'conf data'

zkcli.sh -zkhost localhost:9983 -cmd putfile /solr.xml /User/myuser/solr/solr.xml

zkcli.sh -zkhost localhost:9983 -cmd get /solr.xml

zkcli.sh -zkhost localhost:9983 -cmd getfile /solr.xml solr.xml.file

zkcli.sh -zkhost localhost:9983 -cmd clear /solr

zkcli.sh -zkhost localhost:9983 -cmd list

zkcli.sh -zkhost localhost:9983 -cmd ls /solr/live_nodes

zkcli.sh -zkhost localhost:9983 -cmd clusterprop -name urlScheme -val https

zkcli.sh -zkhost localhost:9983 -cmd updateacls /solr
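A typical workflow chains several of these commands: upload a config set, link it to a collection, then list what landed in ZooKeeper. Below is a sketch of that sequence. It assumes the example cloud's embedded ZooKeeper on localhost:9983 and uses the paths from the examples above. It prints the commands unless DRY_RUN=0:

```shell
#!/usr/bin/env bash
# Assumptions: embedded ZooKeeper on localhost:9983, Solr unpacked in ~/Downloads.
ZKCLI="${ZKCLI:-$HOME/Downloads/solr-8.1.1/server/scripts/cloud-scripts/zkcli.sh}"
ZK_HOST="localhost:9983"

run_zkcli() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "$ZKCLI -zkhost $ZK_HOST $*"   # print only
  else
    "$ZKCLI" -zkhost "$ZK_HOST" "$@"
  fi
}

run_zkcli -cmd upconfig -confdir /opt/solr/collection1/conf -confname myconf
run_zkcli -cmd linkconfig -collection collection1 -confname myconf
run_zkcli -cmd ls /configs/myconf   # uploaded config sets live under /configs/<confname>
```

After a changed config is linked, the collection usually needs a Collections API reload (action=RELOAD) before Solr picks it up.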

DEBUG

Start with verbose (DEBUG) logging

bin/solr start -f -v

Start with quiet (WARN) logging

bin/solr start -f -q
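-v and -q only apply to that single start. To keep a level across restarts, the setting can go into solr.in.sh instead. This assumes your solr.in.sh has the SOLR_LOG_LEVEL setting found in the 8.x include file. The equivalent of -q would be:

```shell
# Excerpt for bin/solr.in.sh: persistent default log level.
# DEBUG, INFO and WARN are among the accepted values; INFO is the default.
SOLR_LOG_LEVEL=WARN
```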

LOG LEVELS

FINEST Reports everything.

FINE Reports everything but the least important messages.

CONFIG Reports configuration errors.

INFO Reports everything but normal status.

WARN Reports all warnings.

SEVERE Reports only the most severe warnings.

OFF Turns off logging.

UNSET Removes the previous log setting.

Set the root logger to level WARN

curl -s http://localhost:8983/solr/admin/info/logging --data-binary "set=root:WARN"
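The same endpoint accepts any logger name, not just root. Below is a sketch of a hypothetical set_log_level helper around it, assuming a node on localhost:8983. org.apache.solr.update is only an example logger name. Levels changed through this API should be held in memory only, so they reset when the node restarts:

```shell
#!/usr/bin/env bash
# Assumption: a Solr node on localhost:8983.
SOLR_URL="${SOLR_URL:-http://localhost:8983/solr}"

# set_log_level LOGGER LEVEL: hypothetical helper that posts "set=LOGGER:LEVEL".
set_log_level() {
  local body="set=$1:$2"
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "curl -s $SOLR_URL/admin/info/logging --data-binary \"$body\""   # print only
  else
    curl -s "$SOLR_URL/admin/info/logging" --data-binary "$body"
  fi
}

set_log_level root WARN
set_log_level org.apache.solr.update DEBUG
```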